HPC For Energy

What are the basics of High-Performance Computing?

High-performance computing (HPC) is a term used to describe the computation of an extremely large number of calculations in a practical amount of time. The two elements required to do this are a high-performance computer (with a speed in the teraflops range or greater) and software code that instructs the computer how to accomplish the calculations.

A traditional desktop computer has a single central processing unit (CPU), and calculations are completed by breaking a problem down into a discrete series of instructions. The computer executes the instructions one after another, and only one instruction can be executed at any moment in time. The hardware in high-performance computers consists of multiple CPUs and is configured to run parallel computations. In parallel computing, software directs the solution of many calculations at the same time for one large problem. Each problem must be broken down into discrete parts that can be solved concurrently, with instructions written so that each piece is solved simultaneously on a different CPU.
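
The decomposition described above can be illustrated with a minimal Python sketch. It is not code from any laboratory system: the function names (`sum_of_squares`, `split_range`, `parallel_total`) and the toy problem are assumptions chosen for illustration. The sketch uses a thread pool only to stay portable. In CPython, threads do not actually speed up CPU-bound work, and on a real HPC system each part would typically run on its own CPU.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(start, stop):
    """Solve one discrete part: the sum of i*i for start <= i < stop."""
    return sum(i * i for i in range(start, stop))


def split_range(n, parts):
    """Break the range [0, n) into `parts` contiguous chunks."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for k in range(parts):
        # The first `extra` chunks take one additional element each.
        stop = start + step + (1 if k < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def serial_total(n):
    """Serial version: one worker handles the whole problem."""
    return sum_of_squares(0, n)


def parallel_total(n, workers=4):
    """Parallel version: each chunk is handed to a separate worker."""
    chunks = split_range(n, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(lambda chunk: sum_of_squares(*chunk), chunks)
        # Combine the partial results into the final answer.
        return sum(partials)


if __name__ == "__main__":
    print(serial_total(1_000_000) == parallel_total(1_000_000))
```

The key design point is that the pieces are independent: no chunk needs a result from another chunk, so the partial sums can be computed in any order and combined at the end.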

Lawrence Livermore to Use HPC to Advance Clean Energy Technology

10/25/11

HPCWire.com: HPC Wire, a leading news service devoted specifically to developments in high-performance computing, reports on the announced HPC for Energy Incubator program of Lawrence Livermore National Laboratory. The article notes that the incubator program is an outgrowth of the HPC National Roadmap, which was kicked off in May 2011 with the National Summit on Advancing Clean Energy Technologies.

Laboratory Science Entwined with Rise in Computing

LIVERMORE scientists have been using computer simulations to attain breakthroughs in science and technology since the Laboratory’s founding. High-performance computing remains one of the Laboratory’s great strengths and will continue to be an important part of future research efforts.

To meet our programmatic goals, we demand ever more powerful computers from industry and work to make them practical production machines. We develop system software, data management and visualization tools, and applications such as physics simulations to get the most out of these machines. High-performance computing, theoretical studies, and experiments have always been partners in Livermore’s remarkable accomplishments.

The Laboratory’s cofounders, Ernest O. Lawrence and Edward Teller, along with Herbert York, the first director, recognized the essential role of high-performance computing to meet the national security challenge of nuclear weapons design and development. Electronic computing topped their list of basic requirements in planning for the new Laboratory in the summer of 1952. The most modern machine of the day, the Univac, was ordered at Teller’s request before the Laboratory opened its doors in September. The first major construction project at the site was a new building with air conditioning to house Univac serial number 5, which arrived in January 1953.

Edward Teller, whose centennial we are celebrating this year, greatly appreciated the importance of electronic computing. His thinking was guided by his interactions with John von Neumann, an important pioneer of computer science, and his prior experiences using “human computers” for arduous calculations. Teller was attracted to and solved problems that posed computational challenges—the most famous being his collaborative work on the Metropolis algorithm, a technique that is essential for making statistical mechanics calculations computationally feasible. His work demonstrated his deeply held belief that the best science develops in concert with applications.

This heritage of mission-directed high-performance computing is as strong as ever at Livermore. Through the National Nuclear Security Administration’s (NNSA’s) Advanced Simulation and Computing Program, two of the world’s four fastest supercomputers are located at Livermore, and they are being used by scientists and engineers at all three NNSA laboratories. The prestigious Gordon Bell Prize for Peak Performance was won in 2005 and 2006 by simulations run on BlueGene/L, a machine that has 131,072 processors and clocks an astonishing 280 trillion floating-point operations per second. Both prize-winning simulations modeled physics at the nanoscale to gain fundamental insights about material behavior that are important to stockpile stewardship and many other programs at the Laboratory.

The article entitled A Quantum Contribution to Technology features Livermore-designed computer simulations that focus on the nanoscale beginning with first principles: the laws of quantum mechanics. The use of large-scale simulations to solve quantum mechanics problems was pioneered in 1980 by Livermore scientist Bernie Alder in collaboration with David Ceperley from Lawrence Berkeley National Laboratory. To predict how materials will respond under different conditions, scientists need accurate descriptions of the interactions between individual atoms and electrons: how they move, how they form bonds, and how those bonds break. These quantum molecular dynamics calculations are extremely demanding. Even with the Laboratory’s largest machines, computational scientists, such as those in Livermore’s Quantum Simulations Group, must design clever modeling techniques to make the run times feasible (hours to days) for simulating perhaps only 1 picosecond of time (a trillionth of a second).

Outstanding science and technological applications go hand-in-hand in this work. As described in the article, our scientists are using quantum simulations to evaluate nanomaterials to reduce the size of gamma-ray detectors for homeland security, provide improved cooling systems for military applications, and help design even smaller computer chips. Yet another quantum simulation project is examining materials to improve hydrogen storage for future transportation.

These examples merely scratch the surface of the novel uses for nanotechnologies that scientists can explore through simulations. One can only imagine what possibilities might be uncovered in the future as computational power continues to increase and researchers become ever more proficient in nanoscale simulations and engineering. True to its heritage, Livermore will be at the forefront of this nascent revolution.

This piece originally ran in the May 2007 Science & Technology Review.

About the Author:

George Miller served as the Director of Lawrence Livermore National Laboratory from 2006 until his retirement in 2011. Prior to serving as lab director, Mr. Miller held various management positions at the lab and began his career at Lawrence Livermore National Laboratory in 1972.

Frequently Asked Questions

HPC IN THE UNITED STATES


Why use High-Performance Computing?

One benefit is that high-performance computing speeds up the process of innovation. To quote Deborah Wince-Smith of the U.S. Council on Competitiveness, supercomputers “offer an extraordinary opportunity for the United States to design products and services faster, minimize the time to create and test prototypes, streamline production processes, lower the cost of innovation, and develop high-value innovations that would otherwise be impossible.” Furthermore, “Supercomputing is part of the corporate arsenal to beat rivals by staying one step ahead of the innovation curve. It allows companies to design products and analyze data in ways once unimaginable.” To learn more, read the Council on Competitiveness’ piece “The New Secret Weapon.” Also, visit our How HPC Can Help blog post.

How does the United States compare to other countries in terms of high-performance computing capabilities?

According to the TOP500 list, which ranks systems using the LINPACK benchmark, the United States has five computing systems in the top 10 and 263 systems among the top 500 computing systems in the world.

Who uses high-performance computing in the United States?

Users of high-performance computing in the United States include scientists at U.S. Department of Energy national laboratories, companies and academics. For instance, companies such as Siemens, Ford and Goodyear have benefited greatly from high-performance computing. To learn more, visit our Success Stories page.

PERFORMANCE AND CAPACITY


What are the basics of High-Performance Computing?

High-performance computing (HPC) is a term used to describe the computation of an extremely large number of calculations in a practical amount of time. The two elements required to do this are a high-performance computer (with a speed in the teraflops range or greater) and software code that instructs the computer how to accomplish the calculations. A traditional desktop computer has a single central processing unit (CPU), and calculations are completed by breaking a problem down into a discrete series of instructions. The computer executes the instructions one after another, and only one instruction can be executed at any moment in time. The hardware in high-performance computers consists of multiple CPUs and is configured to run parallel computations. In parallel computing, software directs the solution of many calculations at the same time for one large problem. Each problem must be broken down into discrete parts that can be solved concurrently, with instructions written so that each piece is solved simultaneously on a different CPU.

What is a petaflop? What is a teraflop?

A petaflop is 10^15, or 1,000,000,000,000,000, floating-point operations per second. A teraflop is 10^12, or 1,000,000,000,000, floating-point operations per second.
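
To make the difference in scale concrete, here is a small Python sketch. The workload of 10^18 floating-point operations is a hypothetical figure chosen for illustration, and `seconds_to_finish` is an illustrative helper that assumes the machine sustains its stated rate for the whole run.

```python
TERAFLOP = 10**12  # floating-point operations per second
PETAFLOP = 10**15  # floating-point operations per second


def seconds_to_finish(operations, flops):
    """Idealized run time: total operations divided by sustained rate."""
    return operations / flops


# Hypothetical workload, not taken from any real simulation.
workload = 10**18

for name, rate in (("teraflop", TERAFLOP), ("petaflop", PETAFLOP)):
    seconds = seconds_to_finish(workload, rate)
    print(f"1 {name}: {seconds:,.0f} s ({seconds / 86_400:.2f} days)")
```

Under these assumptions, the same job takes about 11.6 days at one teraflop and under 17 minutes at one petaflop, a factor of 1,000.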

How fast are these supercomputers?

The fastest supercomputer in the world by the LINPACK benchmark is currently the K computer at the RIKEN Advanced Institute for Computational Science in Kobe, Japan. To achieve this status, the K computer operated at 10.51 petaflops in November 2011.

What’s the difference between high-performance computing and other forms of computing?

High-performance computing ties together the strength of a large number of processors to solve highly calculation-intensive tasks. Personal computers and clusters of computers can solve small-scale versions of these same problems, or sections of them. However, it is the hardware (the physical pieces of the computer) and the software (the instructions) that differ and that allow high-performance computing systems to solve massively large and complex tasks more quickly and in greater detail.

WORKING WITH LLNL


How much does it cost to run or use a high-performance computing system?

The cost depends on the time and expertise needed to develop the software program; the availability of a computer; the amount of power needed to run the computer; and the cost of the computer.

Who runs these computers?

The computers are maintained and operated by a team of technicians and computer scientists on location. Models and simulations run on these computers are developed by teams of computer scientists, engineers and domain scientists for any given project. Access to computers is available locally or remotely.

How do I contact a national laboratory if my company is interested in harnessing its high-performance computing capabilities?

Many national laboratories are partnering with industry. One way to access the national laboratories is through the High Performance Computing Innovation Center. To learn more about ongoing projects where the national laboratories are partnering with industry, visit the HPC for Energy Incubator page.

Incubator

In October 2011, Lawrence Livermore National Laboratory announced a call-for-proposals for participation in the hpc4energy incubator pilot program. The hpc4energy incubator pilot program will establish a no-cost partnership between a national laboratory and selected energy companies to demonstrate the benefits of incorporating HPC modeling and simulation into technology development.

From an initial group of 30 Letters of Intent, Lawrence Livermore chose six partners in March 2012. Energy sectors represented by these partner companies include:

- Energy-efficient buildings

- Carbon capture, utilization and sequestration

- Liquid fuel combustion

- Smart grid technology, power storage and renewable energy integration

The goal of the hpc4energy incubator is to increase and streamline access to the national laboratories. Doing so will help the U.S. research establishment improve American economic competitiveness by focusing their expertise and know-how on spurring innovation and advancing energy technologies.

Lawrence Livermore is pleased to announce the winners of the hpc4energy incubator’s Call for Proposals. The laboratory has selected six promising projects for collaboration with Lawrence Livermore’s HPC resources and teams of computational and domain scientists. Lawrence Livermore is currently working with the following companies to innovate and advance energy technologies. Click below for an update on their results.

Robert Bosch, LLC

Improving Simulations of Advanced Internal Combustion Engines

GE Energy Consulting

Improving PSLF Simulation Performance and Capability

ISO New England

Evaluation of Robust Unit Commitment

Potter Drilling, Inc.

Improving Thermal Spallation Drilling

United Technologies Research Center

Improving Building System Models to Enable Deep Energy Retrofits

GE Global Research

Improving Models for Spray Breakup in Liquid Fuels Combustion

Success Stories

Ford EcoBoost Technology

Ford Motor Company has recognized HPC as a strategic imperative since the 1980s. Today, the company uses HPC clusters from IBM and HP to bring new innovations to market faster and to reduce production costs. Ford engineers recently applied this capability with great success to optimize the design of their EcoBoost engine technology, which is expected to enable better fuel economy in more than 80 percent of Ford’s models by 2013.

The application of HPC gives Ford the ability to analyze how multiple design options and variables would interact. Nand K. Kochhar, Ford’s executive technical leader for global computer-aided engineering (CAE), says “a lot of HPC-based computational analysis is involved in simulating the trade-offs between performance, shift quality and fuel economy. In the case of the engine, we conduct combustion analysis—optimizing a fuel-air mix, for example.” These virtual simulations also allow Ford to improve the aerodynamics of their cars without having to spend the time or money to conduct tests with physical prototypes.

HPC is essential to Ford’s strategy to stay competitive worldwide. Their engineers have also used HPC to analyze the interaction of active and passive safety features, reduce cabin noise and vibration, and improve its strategy to develop hybrid and battery powered vehicles. Kochhar concludes, “The technology allows us to build an environment that continuously improves the product development process, speeds up time-to-

Oil and Gas Industry

Lawrence Livermore National Laboratory has worked with several domestic oil companies to develop an advanced reservoir-monitoring technology. Using the supercomputing power of its HPC machines, Livermore and its industry partners developed a technique that integrates separate measurement data to predict subsurface fluid distribution, temperature and pressure. The system obviates the need for numerous observation wells, and the low-cost system works well for oil and gas recovery; carbon capture and sequestration; and geothermal energy.

The system is already in use to track injected carbon dioxide in projects in Wyoming (Anadarko’s Salt Creek field) and Saskatchewan. Additionally, Chevron is employing the approach to increase recovery and improve environmental performance at its enhanced oil recovery projects in California. Through modeling and simulation, private sector participants have improved well recovery and reduced failure risk.

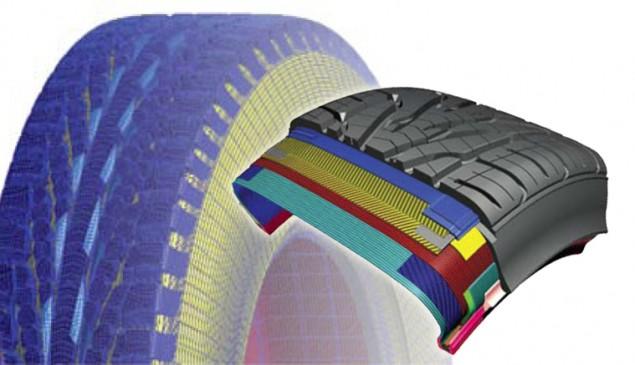

Goodyear

Established in 1898, Goodyear Tire and Rubber Company is the only major domestic tire company. Coming into the 21st century, Goodyear needed to improve its time to market for new products to remain competitive in the global tire market. Goodyear focused its efforts on designing a new, innovative, all-season tire that would provide major improvements in performance and safety—and the company used HPC modeling and simulation to do it.

Partnering with Sandia National Laboratories in New Mexico, Goodyear engineers working with Sandia’s supercomputer experts developed complex, state-of-the-art software to run Goodyear’s HPC clusters. The jointly developed software allowed Goodyear to run more advanced simulations and to maximize the performance of the company’s computers. This innovative approach and collaboration allowed Goodyear to reduce research and development costs and cut time to market for the Assurance tire with TripleTred Technology. HPC modeling and simulation has allowed Goodyear to reduce key product design time from three years to less than one year and tire building and testing costs from 40 percent of the company’s research and design budget to 15 percent.

Says Goodyear’s senior vice president of technology, “Computational analysis tools have completely changed the way we develop tires. They have created a distinct competitive advantage for Goodyear, as we can deliver more innovative new tires to market in a shorter time frame.”

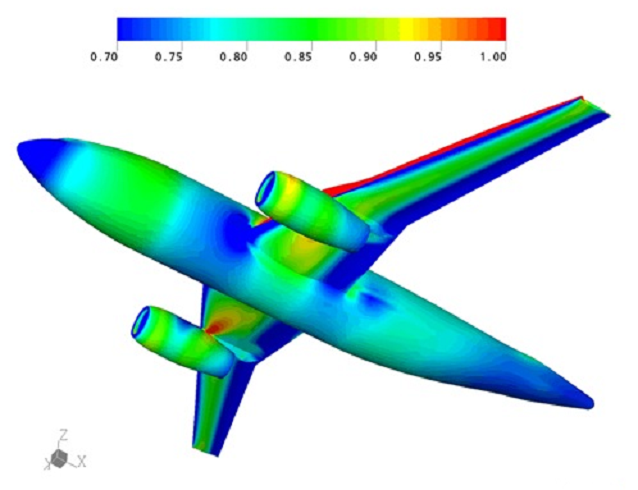

Boeing

Boeing is a world leader in the aerospace industry and one of the largest manufacturers of commercial jetliners and military aircraft. Boeing uses HPC modeling and simulation to remain a leader in an increasingly competitive global market. Receiving a grant from the Department of Energy’s Innovative and Novel Computational Impact on Theory and Experiment (INCITE) competition, Boeing partnered with Oak Ridge National Laboratory to undertake a large-scale computational science project aimed at helping the company build a better airplane.

Through the INCITE program, Boeing scientists and engineers examined ways to improve aircraft performance. Using Oak Ridge supercomputers, Boeing modeled aeroelasticity (the effect of aerodynamic loads on airplane structures) and the effect of new, lighter composites on wing design and performance. The simulation exercise helped the company design a more efficient, stable aircraft wing that improves lift, reduces drag and improves fuel consumption. Additionally, the HPC modeling exercise allowed Boeing to reduce the number of wing designs for the new 787 Dreamliner to seven—a tremendous cost savings. To put that number in context, designing the Boeing 767 in the 1980s required the company to build and to test 77 different wings. Summarizing the benefits of HPC, Doug Ball, chief engineer for Boeing’s Enabling Technology and Research Unit said, “Our work with supercomputers allows us to get a better product out the door faster, which makes us more competitive—it’s as simple as that.”

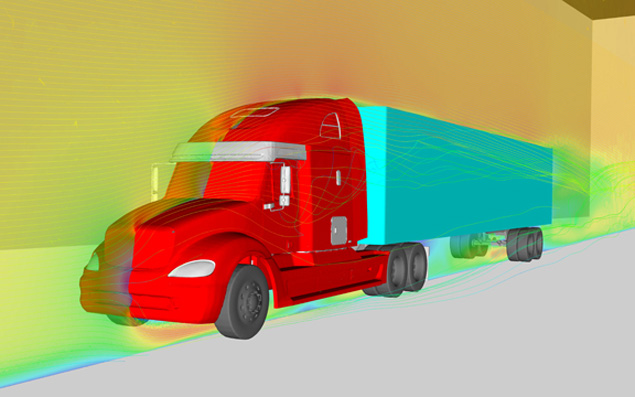

Navistar Truck

A team of LLNL scientists, led by Dr. Kambiz Salari, in partnership with engineers from Navistar, NASA, the U.S. Air Force and other industry leaders, utilized HPC modeling and simulation to develop technologies that increase semi-truck fuel efficiency by 17 percent. The team’s results, if applied to all U.S. trucks, would save 6.2 billion gallons of diesel fuel and reduce CO2 emissions by 63 million tons annually. Projected annual cost savings, based on U.S. average diesel fuel costs of $3.91, are approximately $24.2 billion.
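
The projected cost savings follow directly from the two figures quoted above. The short Python check below reproduces the arithmetic, assuming the $3.91 figure is a price per gallon, which the text implies but does not state. The variable names are illustrative.

```python
GALLONS_SAVED_PER_YEAR = 6.2e9  # diesel saved if applied to all U.S. trucks
PRICE_PER_GALLON = 3.91         # assumed to be U.S. dollars per gallon

annual_savings = GALLONS_SAVED_PER_YEAR * PRICE_PER_GALLON
print(f"${annual_savings / 1e9:.1f} billion per year")  # $24.2 billion per year
```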

The project has applied advanced computing techniques to the reduction of aerodynamic drag in tractor trailers and has demonstrated that high-performance computing can be applied to everyday problems with a big potential payoff. Fuel economy improvement was made possible by a combination of aerodynamic drag reduction, vehicle streamlining, tractor trailer integration and the use of low resistance, wide-base single tires. HPC played a critical role in enabling the modeling and simulation of such detailed vehicle geometry as the hood, engine, wheels, wheel wells, door handles and mirrors, as well as the ability to handle the highly turbulent and massively separated flow fields around the vehicle.

“To improve the aerodynamics of a boxy trailer, we had to trick the air flow to think that trailer is more streamlined than it is,” said Salari.

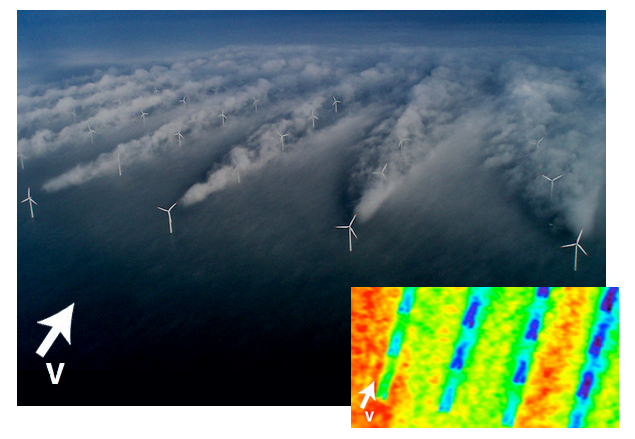

Siemens Energy, Inc.

Accurate wind forecasting is essential to efficiently harness wind power and to help the wind energy industry achieve its wind-power production goals. Working with industry partners, scientists and engineers at Lawrence Livermore National Laboratory are using supercomputers to break new ground in this field. Siemens Energy, Inc. has collaborated with Lawrence Livermore National Laboratory to create high-resolution atmospheric models to improve the efficiency of individual wind turbines and entire wind farms.

Using Livermore’s large computing platforms and advanced fluid dynamic codes, the collaboration has produced timely and accurate wind-prediction capabilities. By taking into account both large-scale wind processes and small-scale terrain and wake effects, these improved models will help the wind energy industry optimize power production, allow wind farm operators to plan ahead and reduce the cost of wind power.

Blog

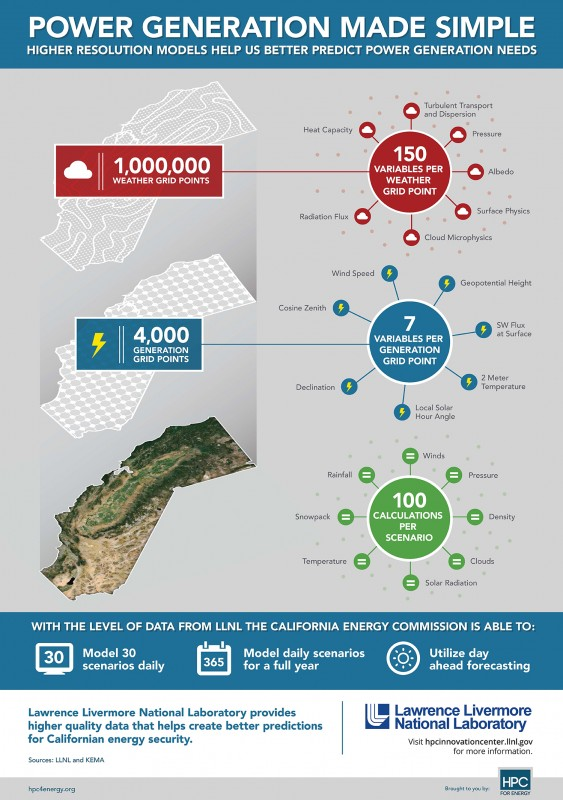

Higher Resolution Models Help Us Better Predict Power Generation Needs

Lawrence Livermore National Laboratory provides solutions through innovative science, engineering and technology. Our national laboratory system can provide HPC modeling and simulation expertise and capabilities found in few places in the world. Whether creating detailed theoretical equations or writing the millions of lines of code that allow these supercomputers to make increasingly precise predictions of product performance, the scientists and engineers at these laboratories can help spur American innovation, entrepreneurship and competitiveness. LLNL’s supercomputers take in large amounts of data and analyze them in detail to produce a more accurate data set, which offers better insight into the value of demand response and storage. With more data available, Lawrence Livermore National Laboratory can provide higher-quality results that lead to better predictions for California’s energy security.

Power of Partnerships by the Council on Competitiveness

At the Council on Competitiveness, we work toward increasing the competitiveness of the United States through our initiatives. Earlier this year, the Council launched one such initiative: the American Energy & Manufacturing Competitiveness (AEMC) Partnership. This 3-year effort is a partnership with the Department of Energy, Office of Energy Efficiency & Renewable Energy (EERE) through its Clean Energy Manufacturing Initiative (CEMI), to support leverage points in the innovation ecosystem by creating a public-private partnership (PPP) to achieve two goals: increase U.S. competitiveness in clean energy products and increase U.S. competitiveness in the manufacturing sector overall by increasing energy productivity.

Similar to the goal of the HPC4Energy incubator, where energy companies worked in partnership with Lawrence Livermore National Laboratory to use high performance computing, modeling and simulation to solve industry problems, EERE is working with the Council through the AEMC Partnership to determine exciting and profitable ways the public and private sector can work together. The Council convened leaders in industry, academia, national laboratories, labor, non-profit organizations and the government during four dialogues in 2013 to determine relevant PPP concepts where organizations across the innovation ecosystem can work together and amplify achievements. Following an extensive literature review and release of the Power of Partnerships by the Council on Competitiveness, the first AEMC Partnership dialogue convened over 100 leaders with interest and expertise in clean energy and manufacturing on April 11-12, 2013 in Washington, D.C. to kick off the collection of ideas on PPPs. The second dialogue followed on June 20, 2013, hosted by President Lloyd Jacobs of the University of Toledo in Toledo, Ohio, focusing on broad PPP concepts including the power of advanced materials in achieving the two AEMC Partnership goals.

The third dialogue was co-hosted by Dr. Mark Little, Senior Vice President and Chief Technology Officer of General Electric in Niskayuna, New York on August 12-13, 2013. At this dialogue, the Council presented 5 PPP concepts to participants for their evaluation, in preparation to down-select to two concepts for the fourth dialogue. The fourth dialogue was co-hosted by Michael Splinter, Chairman of the Board of Applied Materials in Santa Clara, California on October 17, 2013. The Council presented the two PPP concepts for participants to evaluate and provide comments: Facilitating the Transition from Prototypes to Commercially Deployable Products and a Clean Energy Materials Accelerator. The Council collected perspectives and insights from dialogue participants to better understand industry needs and interest in preparation for an upcoming first-of-its-kind inaugural American Energy and Manufacturing Competitiveness Summit, which will be held on December 12, 2013 in Washington, D.C.

The inaugural AEMC Summit provides a venue for energy and manufacturing leaders to discuss innovations and future directions in the energy and manufacturing sectors. At this Summit, the Council will present the two PPP concepts cultivated during the AEMC Partnership dialogue series to Dr. Danielson, Assistant Secretary for Energy Efficiency and Renewable Energy, in addition to convening panel discussions on creating a resurgence in energy and manufacturing in the United States and on increasing energy productivity and creating innovative energy products in an age of low-cost energy. Confirmed speakers for the inaugural AEMC Summit include the Honorable Ernest Moniz, United States Secretary of Energy, Mr. Michael Mansuetti, President of Robert Bosch, LLC, Ms. Amy Ericson, U.S. Country President, Alstom, and Mr. Norman R. Augustine, former chairman and CEO of Lockheed Martin.

Lab’s High Performance Computing Center Honored by HPCWire as 2013’s Best Application of Green Computing and Best Government-Industry Collaboration

During last week’s “Supercomputing 2013” conference in Denver, Colorado, Lawrence Livermore National Laboratory was honored with HPCWire’s reader’s choice award for best application of green computing and the editor’s choice award for best government-industry collaboration. HPCWire is one of the country’s foremost high-performance computing-focused news organizations.

The reader’s choice award for best application of green computing was awarded to Livermore’s collaboration with IBM on “Sequoia,” the world’s most energy efficient supercomputer. Sequoia is part of the Laboratory’s work on the Stockpile Stewardship program, helping to ensure the reliability of America’s nuclear arsenal. The editor’s choice award for best government-industry collaboration honored Livermore and IBM for the HPC4Energy incubator program on the “Vulcan” supercomputer. The HPC4Energy incubator program helps to illustrate the benefits of supercomputing to private industry through external application of supercomputer technologies and expertise to energy applications.

LAWRENCE LIVERMORE’S VULCAN BRINGS 5 PETAFLOPS COMPUTING POWER TO COLLABORATIONS WITH INDUSTRY AND ACADEMIA

The Vulcan supercomputer at Lawrence Livermore National Laboratory is now available for collaborative work with industry and research universities to advance science and accelerate the technological innovation at the heart of U.S. economic competitiveness.

A 5 petaflops (quadrillion floating point operations per second) IBM BlueGene/Q system, Vulcan will serve Lab-industry projects through Livermore’s High Performance Computing (HPC) Innovation Center as well as academic collaborations in support of DOE/National Nuclear Security Administration (NNSA) missions. The availability of Vulcan effectively raises the amount of computing at LLNL available for external collaborations by an order of magnitude.

“High performance computing is a key to accelerating the technological innovation that underpins U.S. economic vitality and global competitiveness,” said Fred Streitz, HPC Innovation Center director. “Vulcan offers a level of computing that is transformational, enabling the design and execution of studies that were previously impossible, opening opportunities for new scientific discoveries and breakthrough results for American industries.”

Six industrial collaboration projects were recently concluded under Livermore Lab’s initiative called the hpc4energy incubator. Beyond these examples, the availability of Vulcan enables even larger systems to be simulated over longer time periods with greater fidelity and resolution.

In addition to publishable incubator programs, the HPC Innovation Center provides on-demand, proprietary access to Vulcan and connects companies and academic collaborators with LLNL’s computational scientists and engineers, and computer scientists to help solve high-impact problems across a broad range of scientific, technological and business fields. As projects are initiated, the HPC Innovation Center rapidly assembles teams of experts and identifies the computer systems needed to develop and deploy transformative solutions for sponsoring companies. The HPCIC draws on LLNL’s decades of investment in supercomputers, HPC ecosystems, and expertise, as well as the technology and know-how of HPC industry partners. Companies interested in access to Vulcan, along with other supercomputing services are invited to respond to the posted Notice of Opportunity at https://www.fbo.gov/index?s=opportunity&mode=form&id=3485e761166e4ebf1c9dbf6e42c5de9a&tab=core&_cview=0.

Vulcan also will serve as an HPC resource for LLNL’s Grand Challenge program and other collaborations involving the Laboratory’s Multi-programmatic and Institutional Computing effort. Such collaborations with academic and research institutions serve to advance science in fields of interest to DOE/NNSA including security, energy, bioscience, atmospheric science and next generation HPC technology.

On the November 2012 industry-standard Top500 list of the world’s fastest supercomputers, Vulcan would rank as the world’s sixth fastest HPC system. This makes it one of the world’s most powerful computing resources available for collaborative projects.

During its shakeout period, Vulcan was combined with the larger Sequoia system, producing some breakthrough computation, notably setting a world speed record of 504 billion events per second for a discrete event simulation – a collaboration with the Rensselaer Polytechnic Institute (RPI). This achievement opens the way for the scientific exploration of complex, planetary-sized systems. See https://www.llnl.gov/news/newsreleases/2013/Apr/NR-13-04-06.html

Housed in LLNL’s Terascale Facility, Vulcan consists of 24 racks, 24,576 compute nodes and 393,216 compute cores. For additional specs, see: https://computing.llnl.gov/?set=resources&page=OCF_resources#vulcan
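
The figures above imply a regular layout, which a short Python calculation can make explicit. This is only a sketch derived from the numbers quoted in this release. The per-core value uses the rounded 5 petaflops figure, so it should be read as approximate.

```python
RACKS = 24
NODES = 24_576
CORES = 393_216
PEAK_FLOPS = 5 * 10**15  # "5 petaflops" as quoted; a rounded figure

nodes_per_rack = NODES // RACKS       # compute nodes in each rack
cores_per_node = CORES // NODES       # compute cores on each node
peak_per_core = PEAK_FLOPS / CORES    # approximate peak rate per core

print(f"{nodes_per_rack} nodes per rack, {cores_per_node} cores per node")
print(f"about {peak_per_core / 1e9:.1f} gigaflops per core")
```

Both divisions come out exact: 1,024 nodes per rack and 16 cores per node.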

Related Links:

- The High Performance Computing Innovation Center: http://hpcinnovationcenter.llnl.gov/

- hpc4energy Incubator: http://hpc4energy.org/incubator/

- LLNL HPC systems: https://computing.llnl.gov/

- Advanced Simulation and Computing: https://asc.llnl.gov/

Founded in 1952, Lawrence Livermore National Laboratory (www.llnl.gov) provides solutions to our nation’s most important national security challenges through innovative science, engineering and technology. Lawrence Livermore National Laboratory is managed by Lawrence Livermore National Security, LLC for the U.S. Department of Energy’s National Nuclear Security Administration.

National Summit on Advancing Clean Energy Technologies

On May 16-17, 2011 in Washington, D.C., the Howard Baker Forum, the Bipartisan Policy Center and Lawrence Livermore National Laboratory led the National Summit on Advancing Clean Energy Technologies: Entrepreneurship and Innovation through High-Performance Computing.

The National Summit brought together the talent and insights of energy technologists and computational experts with the knowledge and experience of industry executives and public officials. During the event, speakers and panelists discussed practical pathways to improve America’s pursuit of energy and environmental security; economic growth and competitiveness; and the creation of next-generation, high-tech jobs.

The National Summit was co-sponsored by the U.S. Chamber’s Institute for 21st Century Energy, the Council on Competitiveness, the American Energy Innovation Council, the National Venture Capital Association, Oak Ridge National Laboratory and the Science Coalition. More than 300 experts and practitioners from business, finance, industry, government, academia and the nation’s leading science and computing laboratories attended and contributed to the two-day event. The National Summit explored ways that the high-performance computing (HPC) capabilities of our national laboratories can help our nation meet this century’s energy challenges by expediting the commercialization of clean energy technologies.

The National Summit was organized with the goal of producing an actionable, national roadmap for advancing energy technologies through the application of HPC and modeling and simulation. The National Summit was the first step in this process. Knowledge and the application of HPC can provide an edge to American entrepreneurs and companies and hasten implementation of crucial new technologies by substantially reducing development time and cost. The United States is a world leader in HPC and advanced simulation applications, and the national laboratory system can provide expertise and capabilities at a level found few places in the world.

National Summit partners released the Report on a National Summit on Advancing Clean Energy Technologies in October 2011. In accordance with that report, this website serves as a one-stop shop for what companies need to know in order to leverage the valuable HPC assets available in the United States.

How HPC Can Help

High-performance computing can provide an edge to American entrepreneurs and companies and hasten the implementation of crucial new technologies by substantially reducing development time and cost. The United States is a world leader in high-performance computing and advanced simulation applications. By testing a new concept or product in virtual space, HPC modeling and simulation dramatically reduces the number of physical prototypes necessary to bring a product to market. By shortening the development window, HPC gives American companies an edge in an increasingly competitive global marketplace.

Companies such as Boeing, Goodyear and Siemens have all used HPC modeling and simulation to develop new and innovative products. Boeing used high-performance computing to reduce the number of wing prototypes from 77 in previous aircraft models to just 7 for the 787 Dreamliner. Goodyear partnered with Sandia National Laboratories to reduce development time and cost for its all-season tire with TripleTred Technology. Siemens is partnering with Lawrence Livermore National Laboratory to create detailed wind forecasting models to improve the efficiency of individual wind turbines and the performance of entire wind farms.

Our national laboratory system can provide HPC modeling and simulation expertise and capabilities found few places in the world. Whether creating detailed theoretical equations or writing the millions of lines of code that allow these supercomputers to make increasingly precise predictions on product performance, the scientists and engineers at th

Ohio rolls out ‘AweSim’ supercomputing initiative

Ohio officials rolled out a new supercomputing initiative this week in Denver aimed at small and mid-sized manufacturers.

The Ohio Supercomputer Center introduced the program, named AweSim, during the SC13 conference, an international showcase for high-performance computing, networking, storage and analysis.

The center says AweSim will provide the benefits of computer modeling and simulation-design to the targeted manufacturing companies through easy-to-use apps.

The $6.4 million public-private initiative is funded through Ohio’s Third Frontier Commission as well as investments by Procter & Gamble, Intel, AltaSim Technologies, TotalSim USA, Kinetic Vision and Nimbus Services.

Backers used the conference to identify potential new collaborators in the project.